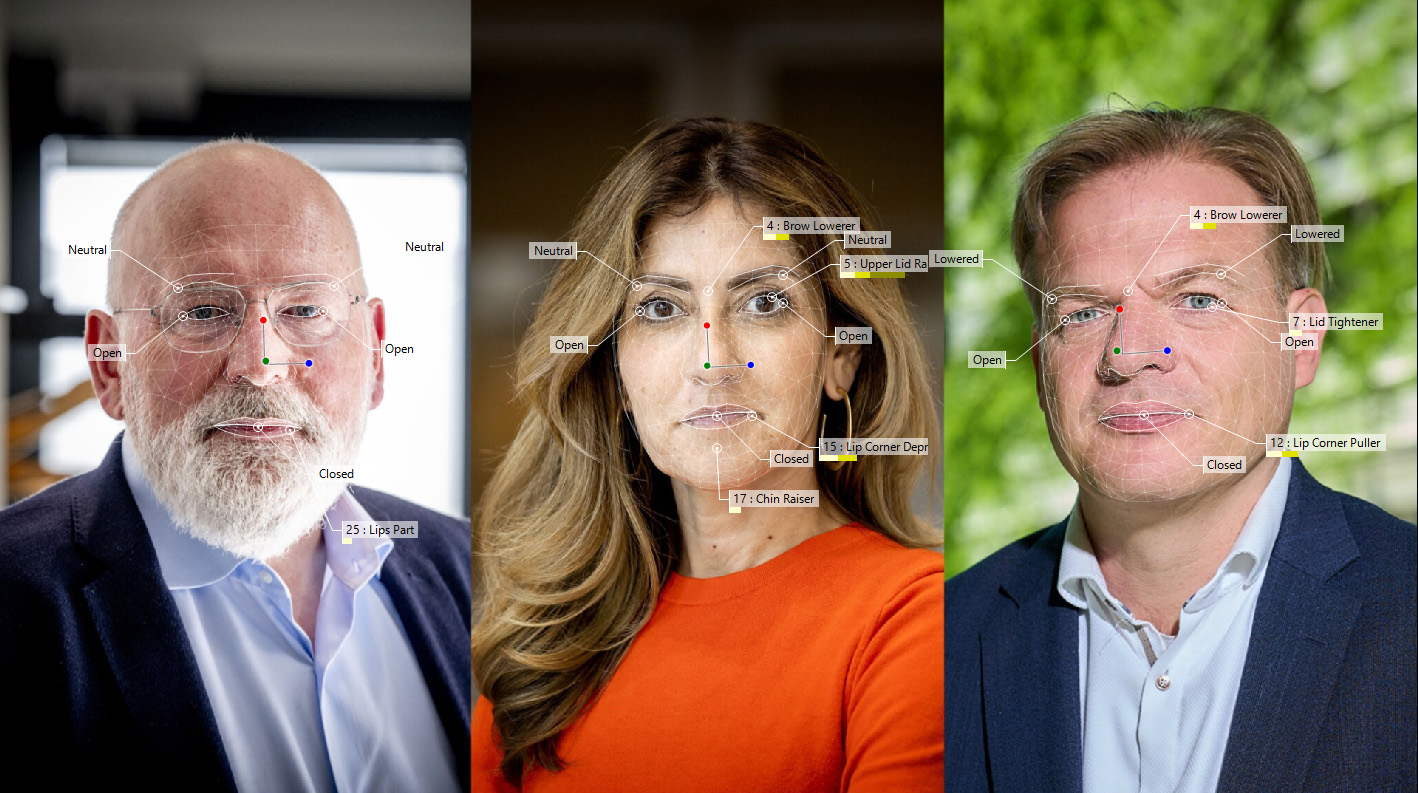

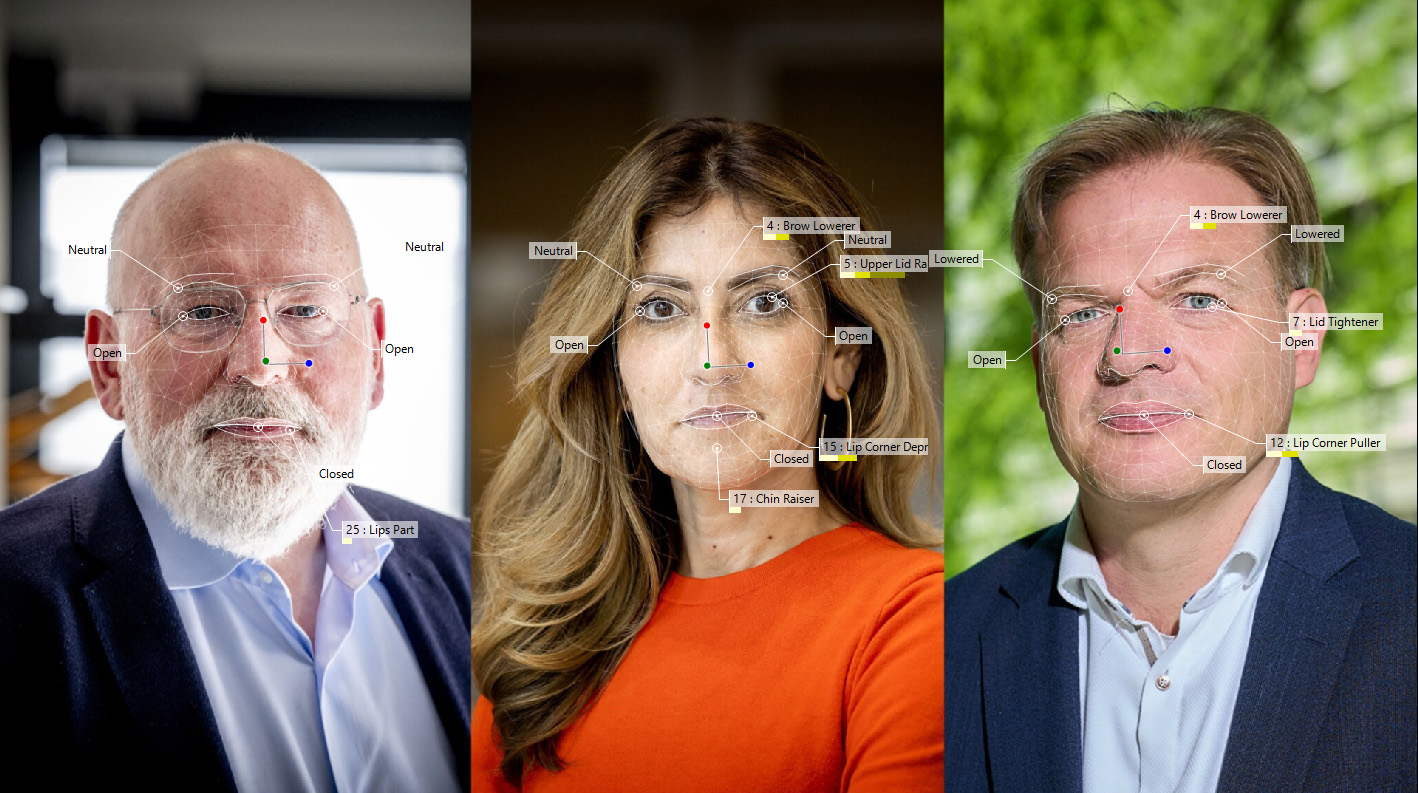

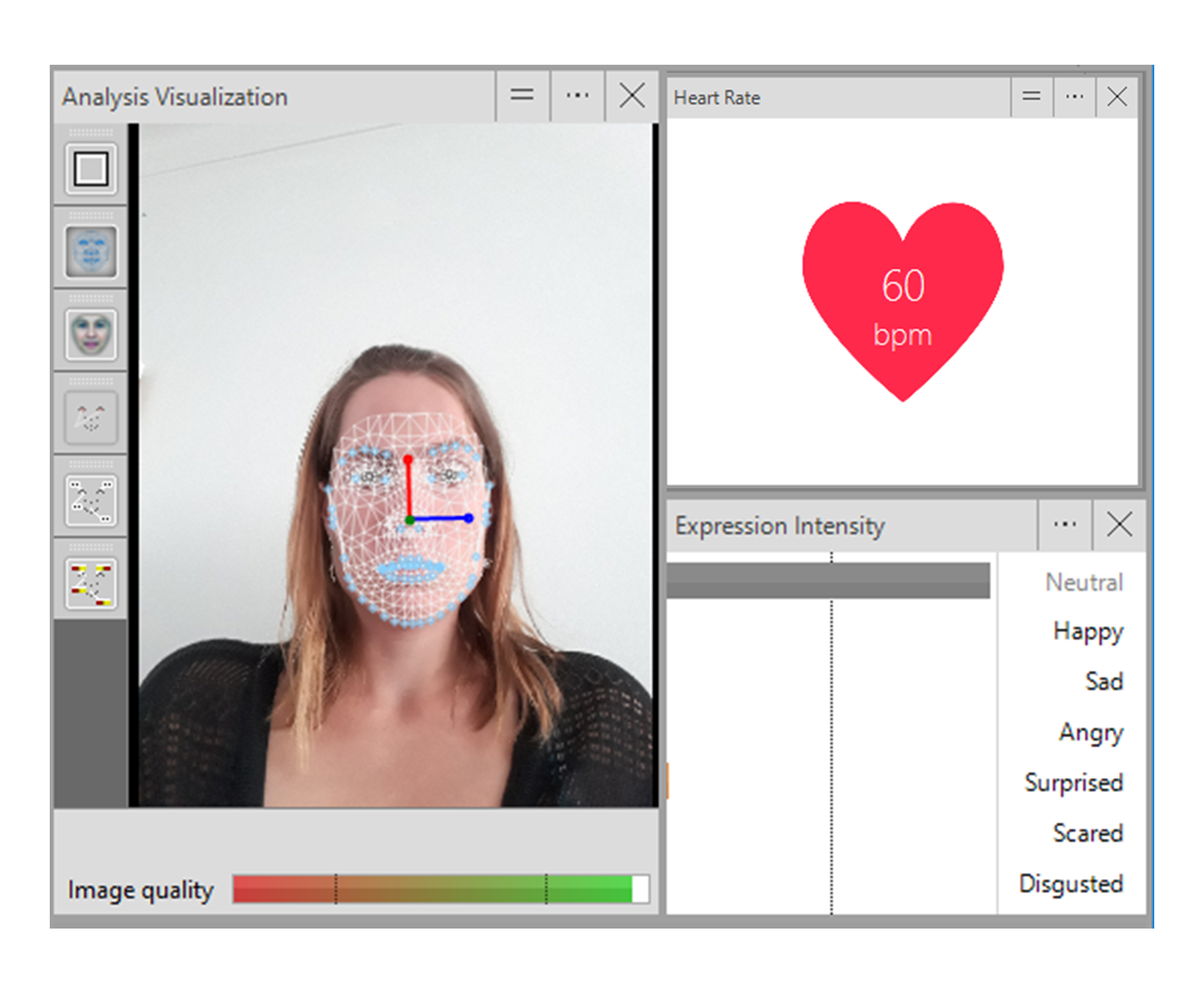

Introducing FaceReader 9.1: Enhanced Facial Analysis for Greater Precision and Functionality

New website for CAS research project on injury-free exercise

FaceReader 9 Release – Improved Analysis Performance and Possibilities

Visitors Can Experience AI with the Mirror Cube in the Vienna Technical Museum

First Results of Our Mobile Health Innovation Project

FaceReader 8 release! – More flexibility and extra possibilities